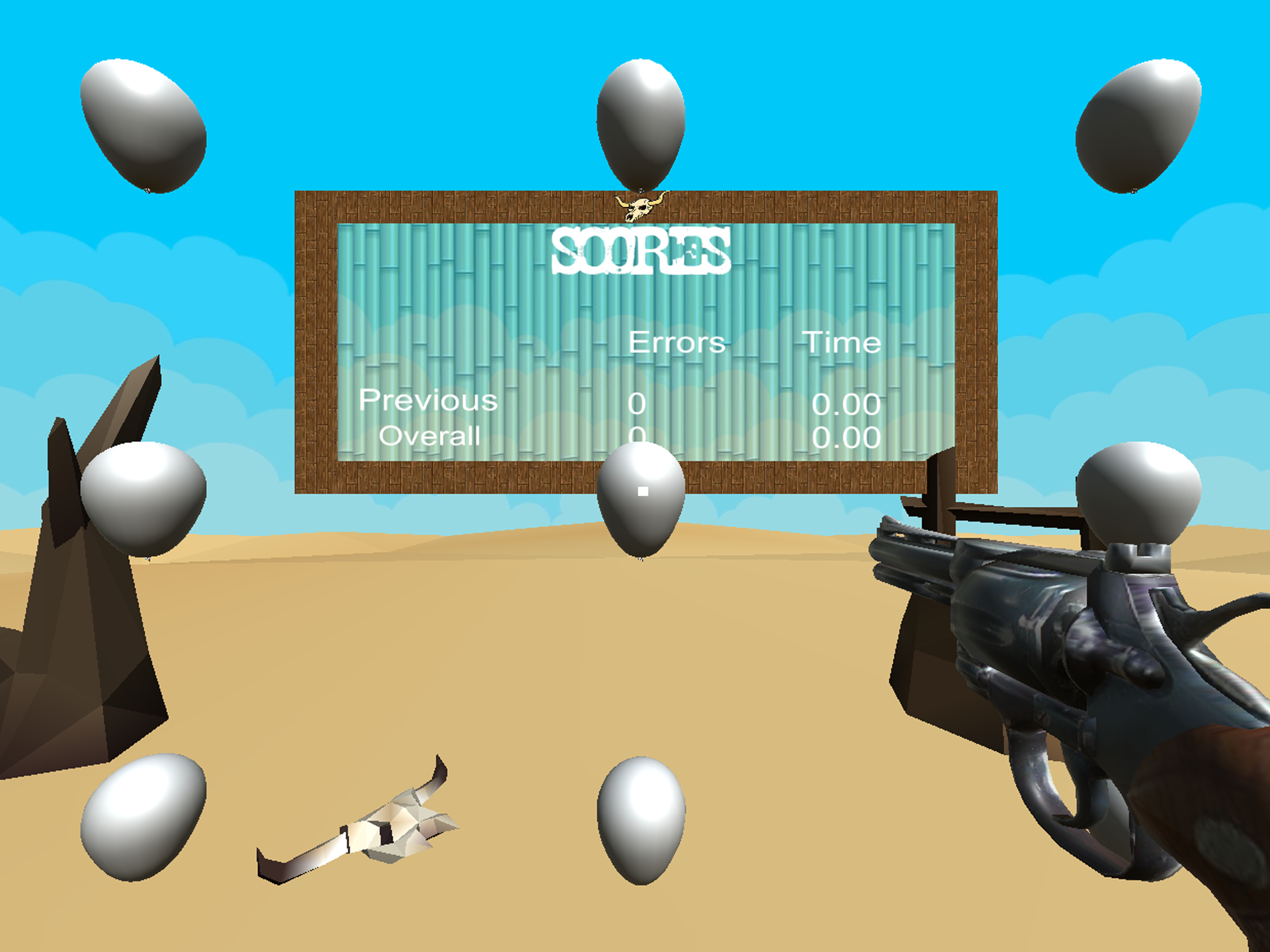

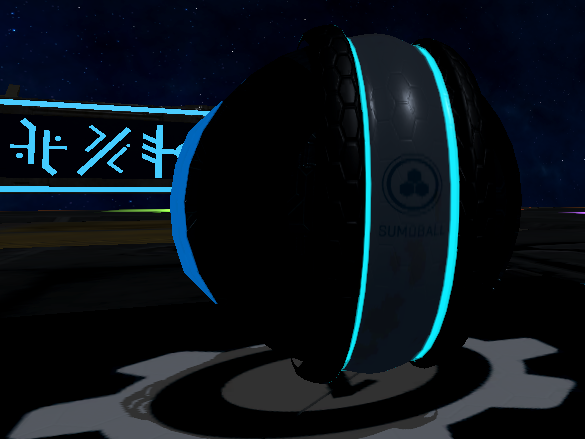

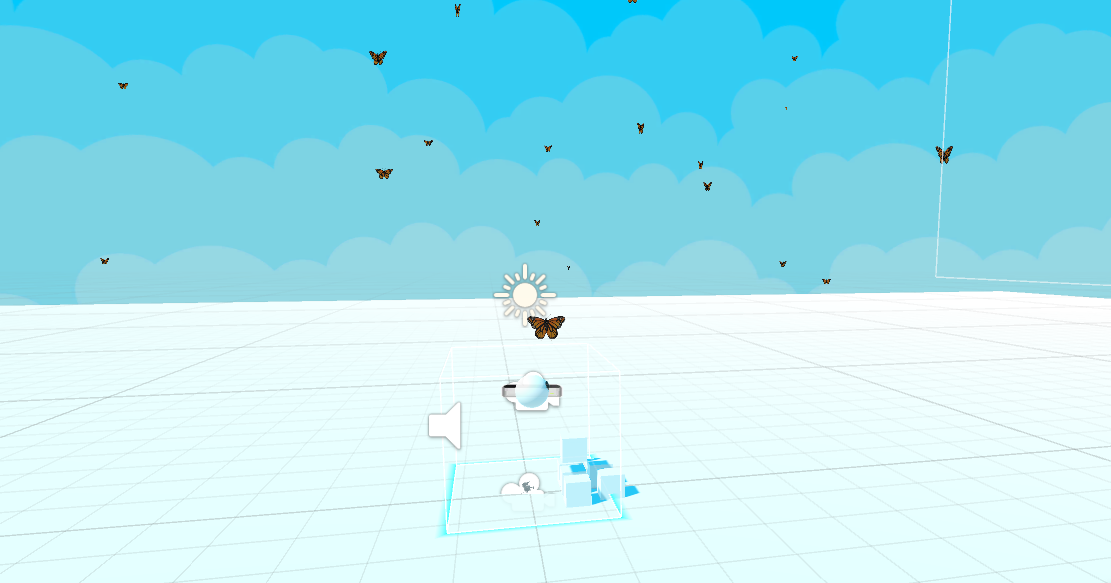

This research project focuses on the use of emerging virtual reality technologies to support the building of virtual sensory rooms for cognitive rehabilitation. The specific aims and objectives are outlined in the next section, followed by a survey of the state of the art in virtual reality tools and (physical) sensory rooms. Building on this survey, a prototype framework will be developed that fully integrates Unity, HTC Vive, and Leap Motion to support the creation of several virtual perception areas populated with a range of visual stimuli (both basic and more complex). Using this methodology, an artefact will be built to serve as a proof of concept for building similar artefacts in the future, as a step towards full validation of the framework.

Please see THIS LINK to read the full dissertation and learn more about the tool.